In the effort to determine if Hans Niemann is guilty of cheating, the chess world has moved on to the only thing less reputable than anal beads: statistics. According to an analysis by an anonymous Chessbase user called gambit-man, Niemann appeared to have an unusually high number of games with 100% engine correlation.

The analysis was initially popularized in a YouTube video by FM Yosha Iglesias. It was then picked up by Hikaru Nakamura. Even the New York Times commented that, “At times, his play is so accurate that it leaves audiences and opponents alike in disbelief.” In the past week, these numbers have become the centerpiece of the case that Niemann cheated in over-the-board chess. There was just one problem: no one seemed to know what they meant.

That was a big deal, because the results were quite sensitive to the particular choice of metric used in the analysis. Hans’s average engine correlation did not seem to be out of the ordinary, but he had unusually many “perfect” games with a 100% score (and perhaps too many with 90%+). This does sound suspicious. Anyone could play a good game, but a perfect game must require engine assistance, right?

But there’s an obvious next question: What does this score mean? How is it calculated? 100% of what?

Your first thought might be that engine correlation is the same thing as engine accuracy. If you’ve ever analyzed one of your games on Lichess or Chess.com, you’re familiar with accuracy. It’s a 0-100 score that represents how close you came to the best moves according to the engine. Lichess publishes the details of exactly how it calculates accuracy.

But as it turns out, engine correlation is not the same as accuracy. Here’s a Lichess study of all of Hans’s 100% engine correlation games. The games all have high scores – mostly in the 90s – but not 100. Already, things are looking a lot less suspicious. A good player can play with accuracy in the 90s, especially if their opponent does not play well. I have many bullet games with accuracy over 90.

Additionally, according to Stockfish 15, the engine Lichess uses for analysis, these games contain inaccuracies and even outright mistakes by Hans. How does a game with a mistake in it get a 100% score on engine correlation?

When I asked on Twitter how engine correlation works a few days ago, no one seemed to know. This was probably the most helpful reply.

But in the past few days, as more people have looked into it, the details have mostly been figured out. Basically, it goes like this:

1. Users upload their engine analysis.
2. Other users can access that analysis.
3. For each move, if it matches the top move of any engine that’s been uploaded, it counts as a hit. Otherwise, it counts as a miss.
4. The engine correlation is the percentage of total moves that are hits.
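The four steps above can be sketched in a few lines of Python. This is a minimal illustration of the hit/miss rule as described, not Chessbase's actual code; the function name and the input layout (one set of uploaded top moves per position) are assumptions made for the example, and the moves are hypothetical.

```python
def engine_correlation(played_moves, engine_top_moves):
    """Percentage of moves that match the top move of at least one engine.

    played_moves: the moves actually played, one per scored position.
    engine_top_moves: a list of the same length; each entry is a set holding
    the top move of every engine analysis uploaded for that position.
    """
    if not played_moves:
        raise ValueError("no moves to score")
    if len(played_moves) != len(engine_top_moves):
        raise ValueError("need one set of engine moves per played move")
    # A move is a hit if ANY uploaded engine had it as its top choice.
    hits = sum(1 for move, tops in zip(played_moves, engine_top_moves) if move in tops)
    return 100.0 * hits / len(played_moves)


# Hypothetical four-move example.
played = ["e4", "Nf3", "Bb5", "O-O"]
uploaded = [{"e4"}, {"Nf3", "d4"}, {"Bc4"}, {"O-O"}]
print(engine_correlation(played, uploaded))  # 75.0

# One more upload, from an engine that happens to prefer Bb5, makes the game "perfect".
uploaded[2].add("Bb5")
print(engine_correlation(played, uploaded))  # 100.0
```

Note that nothing about the moves changed between the two calls; only the pool of uploaded engines did.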

The motivation of the feature is to give users access to deep analysis from strong engines. This makes sense: it can take a long time to analyze a position to high depth, and there’s no reason for everyone to be burning their computing power analyzing the same positions over and over. But the feature is not designed to catch cheaters. In fact, the Chessbase documentation explicitly says this feature should not be used for cheat detection.

There are a couple of problems with using Let’s Check for cheat detection.

First, it’s inconsistent. The engines available for any game depend entirely on users sharing their analysis, so the number and type of engines will differ. Clearly, the more analyses that have been uploaded for a game, the higher the chance that one of them matches the move played. This means that the more scrutinized a game is, the higher the chance it will get a score of 100%. Currently no one is under more scrutiny in the chess world than Hans Niemann, so that’s already a huge source of bias.
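To see why more uploads push games toward 100%, here is a deliberately crude toy model. It assumes every engine independently picks the played move with the same probability and that moves are independent of each other. Both assumptions are false for real engines, which agree with each other far more often than chance, so the model exaggerates the size of the effect. The point is only the direction: for any fixed player, adding engines can only raise the chance of a "perfect" score. The function name and the numbers are hypothetical.

```python
def prob_perfect_game(n_moves, p_match, n_engines):
    """Probability that every move in a game is a hit for at least one engine.

    Toy model (an assumption, not how real engines behave): each uploaded
    engine independently picks the played move with probability p_match,
    and every move in the game is independent of the others.
    """
    p_hit = 1 - (1 - p_match) ** n_engines  # chance at least one engine agrees
    return p_hit ** n_moves                  # chance every move is a hit


# Hypothetical 30-move game where any single engine agrees 80% of the time.
for n_engines in (1, 2, 5, 10):
    print(n_engines, f"{prob_perfect_game(30, 0.8, n_engines):.4f}")
```

With a single engine, a perfect 30-move game in this model is 0.8^30, roughly a 0.1% chance; each extra engine raises that figure.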

Second, it’s possible to manipulate the scores. It does not appear that Chessbase vets the analysis.

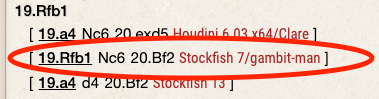

And if you look at Niemann’s 100% games, this appears to be exactly what happened. Some of the moves match only old or unusual engines, and many of those analyses were uploaded by the Chessbase user gambit-man, the guy behind the analysis in the YouTube video. In other words, the guy whose analysis implicated Niemann personally uploaded the engine analyses that got the score to 100%. I first saw this pointed out by the Reddit user gistya.

For example, some of Niemann’s moves match only the Stockfish 7 analysis provided by gambit-man. The current latest version is Stockfish 15; Stockfish 7 came out in 2016. Others match "Fritz 16 w32/gambit-man" (the current version is Fritz 17) and other outdated or unusual engines.

Now, it’s true that a clever cheater could use an older engine to avoid detection. But it’s also true that the more engines you try, the more likely you are to find one that matches a certain move. At the very least, if this is to be taken as evidence of cheating, the same process needs to be applied for other players to create an apples-to-apples comparison. This hasn’t happened.

Review

Let’s recap what we’ve covered so far.

The Let’s Check engine correlation is a poor metric for cheat detection because it’s not consistent between games. Games that receive more attention will have more analyses uploaded, increasing the chances that one matches the moves in the game.

It’s possible for individual users to manipulate the scores. This appears to have happened with Niemann’s games.

When you account for this effect, the “perfect” games become merely very good games, which is far less suspicious.

My Analysis

While I clearly disagree with Yosha Iglesias’s analysis, she did one thing that I admire: showed her work. She laid out her reasoning very clearly in the YouTube videos and provided a wealth of supporting documents in the form of spreadsheets, links to game analysis, etc. This was a welcome change from the secretive nature of a lot of the anti-cheating work currently done in chess and made substantive disagreement possible.

With that in mind, I wanted to do my own analysis. This is certainly not intended as definitive, but rather as a baseline that others in the community can pick up and run with. For that reason, I’m sharing all the data I used and code I ran.

I started with a few requirements:

With data the question is always, “Compared to what?” Statements about Niemann’s games are meaningless without comparisons to other players. In this case, I decided to look at all classical over-the-board games played between 2020 and 2022 by Niemann, Carlsen, Nepomniachtchi, Erigaisi, and Gukesh. I selected these players because I wanted to compare Niemann to the current strongest players in the world, and to other young players who have recently made tremendous progress.

The process should be the same for every player, and it should be decided in advance, before knowing the results. I used Stockfish 15 to evaluate every position from every game played. I then used the evaluations to determine how much the players deviated from the best moves according to the computer.

I focused on move quality rather than engine correlation. Given that the engines are the authorities on good moves, this is a subtle distinction, but it’s an important one. Engine correlation is an “all or nothing” metric: if the player’s move doesn’t exactly match the engine’s top choice, it gets no credit, whether it was a minute inaccuracy or a gross blunder. This makes the analysis extremely sensitive to the choice of engine and the exact parameters the engine is run with. Being able to upload analyses from multiple engines mitigates this somewhat, but as we’ve seen, that process is haphazard and ripe for manipulation. A better approach is to focus on move quality by looking at centipawn loss, which measures how much worse the player’s move was than the engine’s top choice. With this metric, a move that is almost as good as the engine’s top choice scores almost as well. This makes the analysis more robust to engine choice and parameters.
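A small illustration of the difference, using made-up evaluations. Each tuple holds the evaluation (in centipawns, from the mover's point of view) after the engine's top move, the evaluation after the move actually played, and whether the two moves are the same. Correlation treats the near-miss and the blunder identically; centipawn loss does not. The function name and the data are hypothetical.

```python
def centipawn_loss(best_eval_cp, played_eval_cp):
    """Loss of one move in centipawns, from the mover's point of view.

    best_eval_cp: evaluation after the engine's top move.
    played_eval_cp: evaluation after the move actually played.
    Clamped at zero: by definition the played move can't beat the top move,
    so small negative differences are treated as search noise.
    """
    return max(0, best_eval_cp - played_eval_cp)


# Hypothetical moves: (eval after top move, eval after played move, played == top?)
moves = [
    (35, 35, True),     # matches the engine's top choice
    (35, 30, False),    # a near-equal alternative, 5 cp worse
    (35, -265, False),  # a blunder, 300 cp worse
]
hits = sum(is_top for _, _, is_top in moves)
losses = [centipawn_loss(best, played) for best, played, _ in moves]
print(f"correlation: {100 * hits / len(moves):.1f}%")  # correlation: 33.3%
print("losses:", losses)                                # losses: [0, 5, 300]
```

Under correlation, both the second and third moves are simply misses; under centipawn loss, the near-miss costs almost nothing.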

I analyzed every move from every game with Stockfish 15 at depth 18. I then calculated the centipawn loss of each move and compared the performance of the different players.
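One way to turn a game's engine evaluations into a per-player average centipawn loss, sketched under stated assumptions. The evaluations are taken to be from White's point of view, with one for the starting position and one after each move. The evaluation *before* a move stands in for the value of the engine's best move, and scores are clipped to ±1000 cp so mate scores don't dominate. These conventions are illustrative choices, not necessarily the settings behind the numbers in the Results section, and the function name is hypothetical.

```python
def acpl_by_side(evals_white_pov, cap=1000):
    """Average centipawn loss for White and Black in one game.

    evals_white_pov: engine evaluations in centipawns from White's point of
    view, starting with the initial position, then one after every move.
    Assumes the evaluation before a move approximates the value of the best
    move, so each move's loss is the drop in the mover's evaluation.
    Evaluations are clipped to +/- cap so mate scores don't dominate.
    """
    clipped = [max(-cap, min(cap, e)) for e in evals_white_pov]
    losses = {"white": [], "black": []}
    for ply in range(1, len(clipped)):
        before, after = clipped[ply - 1], clipped[ply]
        if ply % 2 == 1:  # odd ply: White just moved, so a drop hurts White
            losses["white"].append(max(0, before - after))
        else:             # even ply: Black just moved, so a rise hurts Black
            losses["black"].append(max(0, after - before))
    return {side: sum(v) / len(v) if v else 0.0 for side, v in losses.items()}


# Hypothetical evaluations for a four-ply fragment.
print(acpl_by_side([30, 30, 40, 0, 0]))  # {'white': 20.0, 'black': 5.0}
```

Here White's third-ply move drops the evaluation by 40 cp and Black's first move gives away 10 cp, which is where the two averages come from.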

Results

The average centipawn loss for each player was as follows:

| Player | Average centipawn loss |
| --- | --- |
| Niemann | 25.6 |
| Carlsen | 16.9 |
| Erigaisi | 23.3 |
| Nepomniachtchi | 16.9 |
| Gukesh | 23.4 |

Niemann was the least accurate player, though not by much. Carlsen and Nepomniachtchi, the world champion and his most recent challenger, were distinctly more accurate than the younger players, who were all at a similar level. Broadly, this is what you'd expect given their ratings and tournament results.

I’ve heard people say that Niemann has been playing more accurately than Carlsen. This isn’t what I found in the data. Over the past three years, Niemann’s play has been substantially less accurate than Carlsen’s, and comparable to other young players who have made big rating jumps in the same period.

Conclusion

Does this prove that Niemann didn’t cheat? No, it just shows that the analysis currently being used to implicate him doesn’t hold up.

It’s very possible that a finer-grained analysis would turn up evidence of cheating. You have to make a lot of choices when it comes to which engine to use, which settings, etc., all of which can affect the end result. This video, for example, argues that Niemann’s progression over time is suspicious. It claims that his centipawn loss remained constant as he went from 2300 to 2700, in contrast to Gukesh, whose centipawn loss declined as you’d expect. This is interesting, but I’d want to double-check it and compare it with more than one player.
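A simple way to check a claim like this, sketched under assumptions: group a player's games by rating band, compute the average centipawn loss for each band, and fit a least-squares line of ACPL against rating. A slope near zero fits the "constant" claim; a clearly negative slope fits the expected decline. The function name and the numbers below are hypothetical, not real data, and with noisy per-game values you would also want an uncertainty estimate before reading much into any slope.

```python
def slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Hypothetical rating bands and ACPL values, for illustration only.
ratings = [2300, 2500, 2700]
acpl = [30.0, 25.0, 20.0]
print(slope(ratings, acpl))  # -0.025, i.e. 2.5 cp less loss per 100 rating points
```

The same fit run on each comparison player would give the apples-to-apples baseline the claim needs.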

There are additional reasons to be suspicious. Niemann admitted to cheating online, and Chess.com says they have evidence that he cheated more than he admitted to. Online sites have access to additional sources of evidence, such as browser tab switches and mouse clicks, that could be extremely damning (though this still wouldn’t say anything about his OTB games). Many of his grandmaster colleagues have questioned specific moves or games he’s played. And maybe Magnus knows something we don’t.

Then again, maybe he doesn’t. Even grandmasters aren’t immune from confirmation bias. If you look at someone’s games starting from the assumption that something is fishy, you’re likely to find something that supports your initial belief. In the roughly one month since this controversy started, nothing resembling conclusive evidence against Niemann has been presented. Chess.com has promised an announcement this week. We’ll see.

The thing that struck me when looking over Niemann’s games is his aggression. Most of the top grandmasters like to avoid risks when possible. Niemann seems more willing to take the game into murky territory, and especially to sacrifice material. Maybe he feels confident doing that because he has engine assistance. Or maybe he’s just an unusually aggressive, intuitive player. It would be a shame to derail the career of an exciting player because his style doesn’t line up with what’s currently popular.

Thanks to John Hartmann for help assembling the data used in the analysis, and to Jake Garbarino and Benjamin Portheault for comments on earlier drafts.

This is most welcome. I had not realized that on top of the massive confirmation bias of counting any of multiple cloud engines as a hit at any time, a big feedback loop was operating.

There is one vital factor you're still missing about average centipawn loss. It grows in proportion to how much one side is ahead. Simply said, if you're a Queen ahead, a pawn difference is minimal. One cannot avert this by cutting off at 2.00 or 4.00 advantage, say, because the proportional effect goes clear down to zero. And as I demonstrated in my article https://rjlipton.wpcomstaging.com/2016/11/30/when-data-serves-turkey/, not correcting for this gives you an unrealistic y-intercept for the rating of perfect play.

Great analysis! Until someone comes forward with substantial evidence that Niemann cheated, all I see is some of the most powerful entities in chess hammering a 19-year-old. It's a witch hunt.